Unlike the video from the ubiquitous cameras found on nearly all ROV and AUV platforms, the image data collected by machine vision cameras are not primarily intended for human perception. The video is not fed back to a monitor where a pilot maneuvers a remote robotic system around a subsea structure or seabed feature. Nor does it capture a scene as a visual proxy in order to transport a person to a time and place that would otherwise be difficult, or even impossible, to experience. Instead, machine vision systems are built for our digital proxies: the computational sidekicks that increasingly form the bedrock of new technologies. Through machine learning models and the new wave of artificial intelligence algorithms, these technologies improve the accuracy, efficiency, and reach of subsea operations.

The Human Eye

The human visual system is the product of millions of years of evolution and is very good at filling in the gaps between what we see and what we observe. This is why, for instance, most people are not aware of the blind spot just off-center in each eye's visual field, at the point where the optic nerve enters the eye.

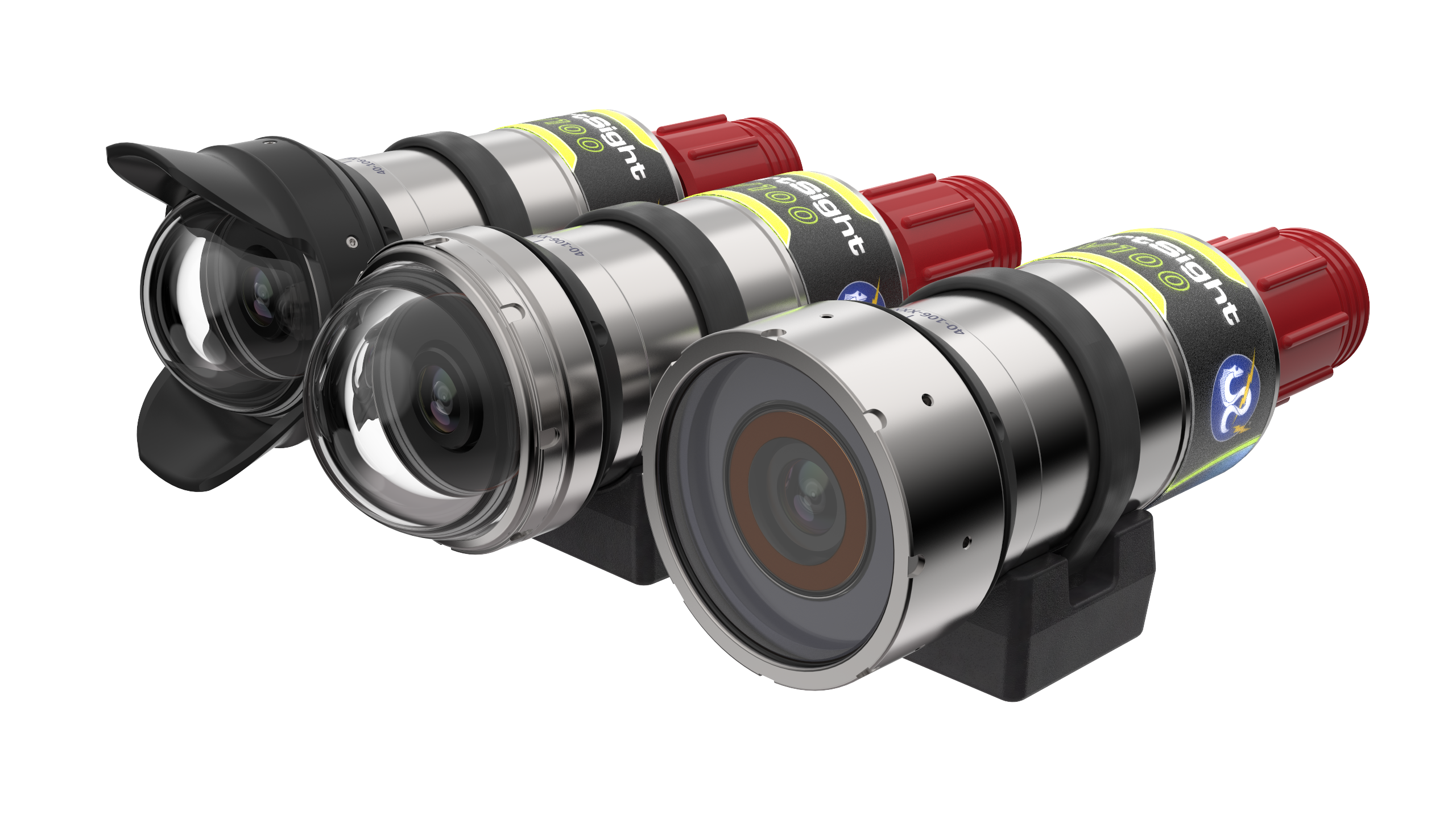

The first machine vision camera in DeepSea’s SmartSight series, the MV100, can produce up to 5MP images and includes multiple lenses and housing configurations. (Image credit: DeepSea)

In most situations, humans are not aware that the fidelity of our color perception is far lower than our sensitivity to light and dark, except for a very small area at the center of our vision. Engineers take advantage of this to reduce the bandwidth of video signals by committing half of the signal to differences in brightness (luma) and splitting the remaining half between the color components (chroma). For most of the history of broadcast television, interlaced frames consisting of alternating odd and even rows were employed to double the effective refresh rate of the image without needing to double the frame rate or the bandwidth. The human visual system is able to fill in the gaps in the lower fidelity color or spatial information, and in most situations we are not aware that this is happening.
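The half-brightness, half-color split described above resembles what is commonly called 4:2:2 chroma subsampling. The following is a minimal sketch of the idea, assuming BT.601-style conversion weights and a tiny synthetic image; the function names are hypothetical and are not taken from any camera or video library.

```python
# Illustrative sketch of 4:2:2-style chroma subsampling.
# Assumptions (not from the article): BT.601-style conversion weights,
# hypothetical function names, and a tiny synthetic RGB image whose
# width is an even number of pixels.

def rgb_to_ycbcr(r, g, b):
    """Convert one RGB pixel to a luma value and two chroma values."""
    y = 0.299 * r + 0.587 * g + 0.114 * b  # brightness (luma)
    cb = 0.564 * (b - y)                   # blue-difference chroma
    cr = 0.713 * (r - y)                   # red-difference chroma
    return y, cb, cr

def subsample_422(rows):
    """Keep luma for every pixel; average chroma over horizontal pixel pairs."""
    luma, chroma = [], []
    for row in rows:
        ycc = [rgb_to_ycbcr(*px) for px in row]
        luma.append([y for y, _, _ in ycc])
        pairs = zip(ycc[0::2], ycc[1::2])
        chroma.append([((a[1] + b[1]) / 2, (a[2] + b[2]) / 2) for a, b in pairs])
    return luma, chroma

def sample_counts(luma, chroma):
    """Count stored luma samples and stored chroma samples (Cb plus Cr)."""
    n_luma = sum(len(row) for row in luma)
    n_chroma = sum(2 * len(row) for row in chroma)
    return n_luma, n_chroma

# A 4 x 2 pixel test image: 8 pixels, so 24 samples as full RGB.
image = [
    [(255, 0, 0), (250, 5, 5), (0, 0, 255), (5, 5, 250)],
    [(0, 255, 0), (5, 250, 5), (128, 128, 128), (120, 120, 120)],
]
luma, chroma = subsample_422(image)
n_luma, n_chroma = sample_counts(luma, chroma)
print(f"luma samples: {n_luma}, chroma samples: {n_chroma}")
```

In this sketch the eight-pixel image is stored as eight luma samples plus eight chroma samples, compared with 24 samples for full RGB. The averaged chroma is exactly the kind of alteration a human viewer rarely notices but a pixel-level algorithm may.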

Video compression and image enhancement, along with other capture and processing steps employed in human-centric imaging, often take advantage of quirks of our visual processing abilities. However, replicating these tricks for a computational visual system is not very practical. This is a significant reason why cameras developed for remote operation by people are sub-optimal for machine vision applications. Instead, machine vision cameras emphasize deterministic image acquisition, control over exposure, access to raw unprocessed pixel data, tight time correlation between nearby pixels, and interface standardization.

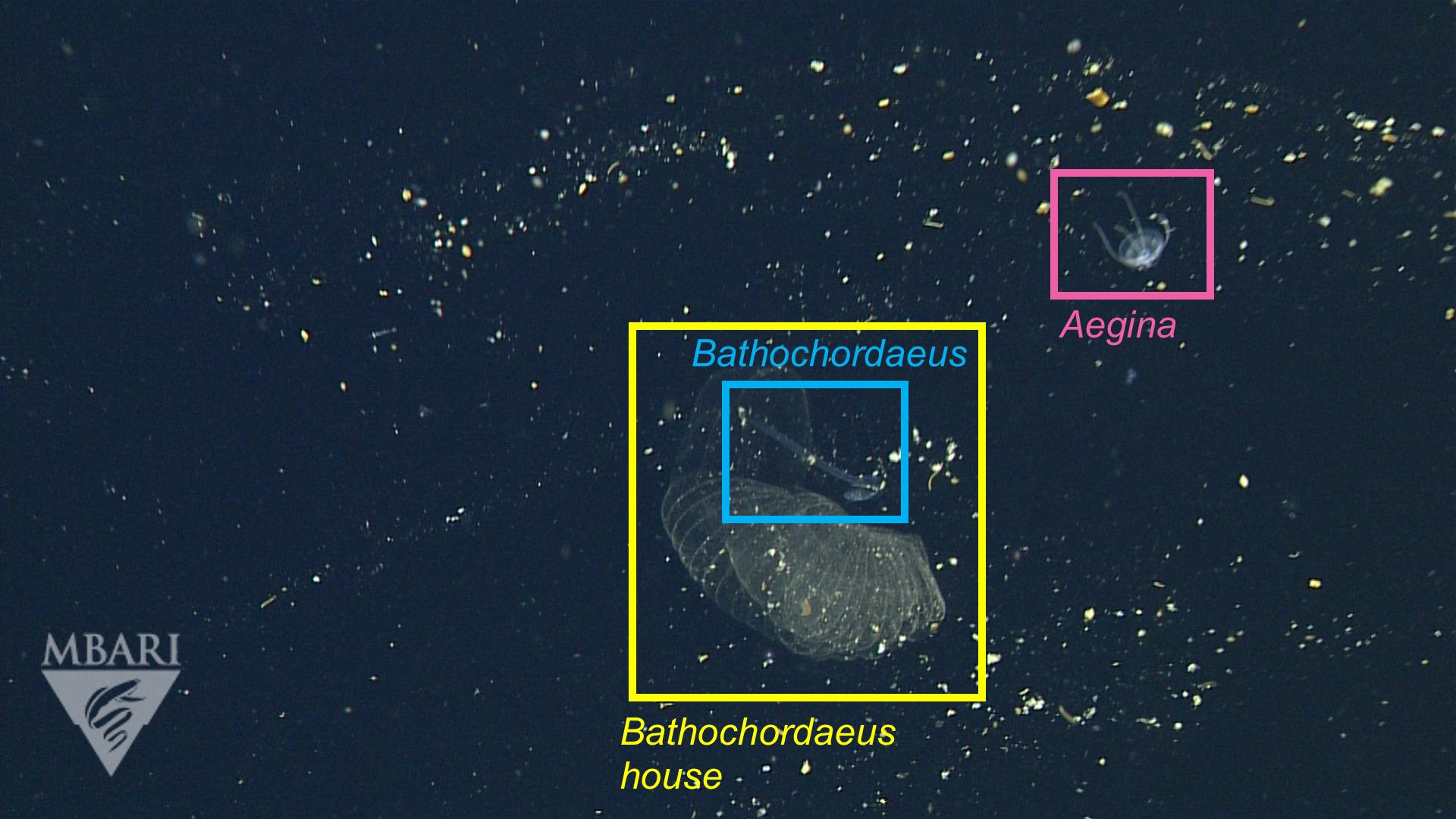

Deep-sea animal identifications using machine-learning algorithms trained with the FathomNet image database. (Image credit: MBARI)

Auto exposure, color correction, white balance, and noise suppression features of conventional video cameras, while helpful for general imaging applications, manipulate the data in every pixel of an image from what was originally read out from the sensor. According to Dr. Paul Roberts, Senior Research Engineer in the Bioinspiration Lab at the Monterey Bay Aquarium Research Institute (MBARI), “You don’t want the raw pixel data to change, and you don’t want the appearance of things to change based on automated algorithms which have non-linear behavior.” In many machine learning models, “the pixel-level details actually matter a lot so being able to control what processing is done is one of the biggest [needs].”
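To make the concern about non-linear automated processing concrete, here is a small sketch. It assumes a simplified pipeline of exposure gain, 8-bit clipping, and gamma encoding; the gain values, the gamma of 2.2, and the function name are illustrative assumptions, not a description of any particular camera.

```python
# Illustrative sketch: how automatic gain plus gamma encoding changes pixel values.
# Assumptions (not from the article): 8-bit pixel values, a hypothetical gain
# chosen by an auto-exposure routine, and a gamma of 2.2.

def auto_process(raw, gain, gamma=2.2):
    """Apply an exposure gain, clip to the 8-bit range, then gamma-encode."""
    processed = []
    for value in raw:
        scaled = min(value * gain, 255.0) / 255.0   # clipping discards highlights
        processed.append(round(255.0 * scaled ** (1.0 / gamma)))
    return processed

# The same scene read out from the sensor: each pixel is twice as bright as the last.
raw = [10, 20, 40, 80, 160]

# Two frames of that scene after the camera picks different gains on its own.
frame_a = auto_process(raw, gain=1.0)
frame_b = auto_process(raw, gain=2.0)

print("raw:    ", raw)
print("frame A:", frame_a)
print("frame B:", frame_b)
print("raw ratio of first two pixels:      ", raw[1] / raw[0])
print("processed ratio of first two pixels:", frame_a[1] / frame_a[0])
```

The raw values double from pixel to pixel, but the processed values do not, and the same scene yields different numbers in the two frames. A model trained on one set of values may see the other as a different input, which is the reason for wanting access to unprocessed pixel data.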

Within the industrial machine vision sector, camera technology is mature and has coalesced around a few standard physical interfaces such as GigE Vision, USB3 Vision, and CoaXPress, as well as application programming interfaces (APIs) like GenICam. This supports broad interoperability between different manufacturers’ products and allows the hardware to scale in resolution or switch between color and monochrome sensors based on application needs. “We really don’t need color for what we’re trying to do, and the color can actually cause you to have to work a lot harder in the optics to make a nice image. Whereas if we can use direct color illumination with mono sensors then we have much higher image quality,” according to Dr. Roberts.

Camera Applications

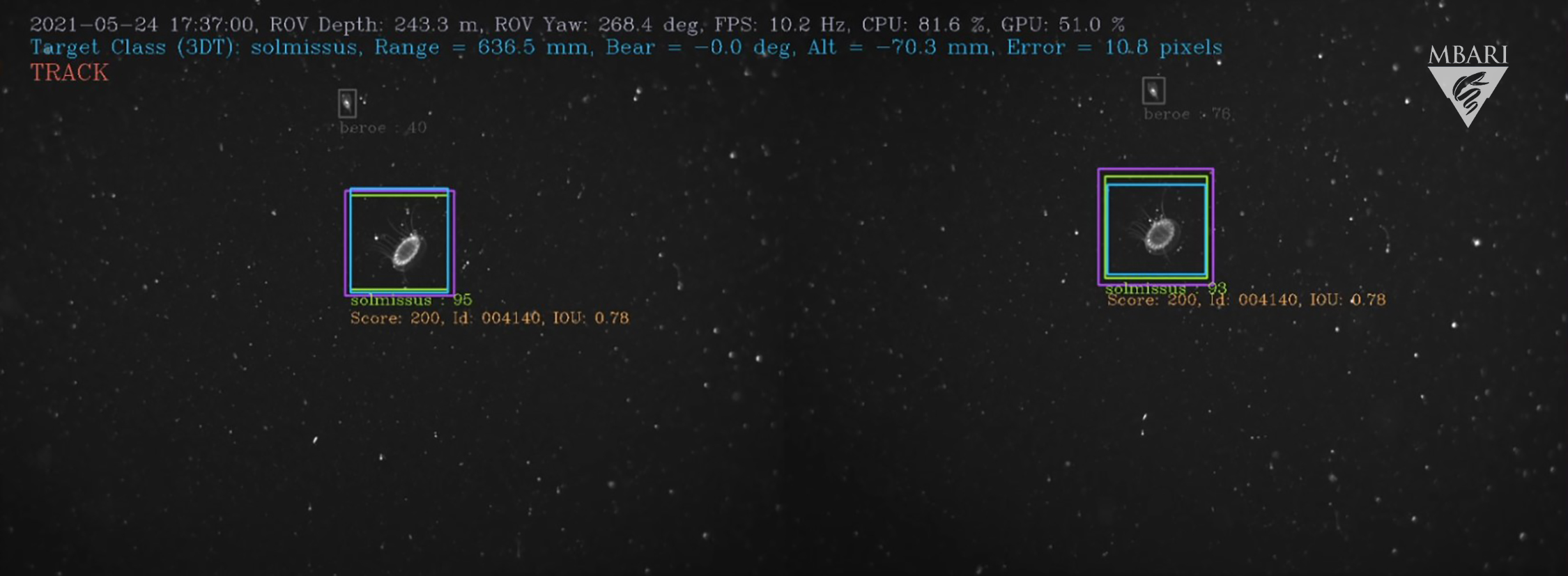

Over the last several years Dr. Roberts has been a part of a team led by Dr. Kakani Katija developing cameras and machine learning models for autonomous object detection and tracking systems, which are tested on MBARI ROV assets. “The project was building a platform which could follow animals around in the ocean, where you want it to be able to identify an interesting animal, approach it, and then follow it,” according to Dr. Roberts. “We used stereo cameras and then we have a system that’s working in real time doing object detection and then triangulation. Then we take that information and feed it into a control system which is then driving the vehicle.” From there, Dr. Roberts says, the algorithm “makes a decision to follow an object, steers the vehicle towards it, and then keeps the object at a certain distance until we tell it to stop or [the target] swims away.” The details of the project are available in the 2021 Winter Conference on Application of Computer Vision (WACV) paper, “Visual tracking of deepwater animals using machine learning-controlled robotic underwater vehicles.”

MBARI’s MiniROV using machine-learning algorithms to identify and track animals in real time. (Image credit: MBARI)
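The detect, triangulate, and control loop Dr. Roberts describes can be illustrated with a simplified sketch. It is not MBARI's implementation: it assumes a rectified pinhole stereo pair, a detector that has already located the target in both images, and a basic proportional controller. All names, gains, and camera parameters are hypothetical.

```python
# Illustrative sketch of stereo triangulation feeding a follow-at-distance controller.
# Assumptions (not from the article or the WACV paper): rectified pinhole stereo
# cameras, focal length in pixels, baseline in meters, hypothetical gains, and
# normalized vehicle commands in the range [-1, 1].
import math

def stereo_depth(focal_px, baseline_m, x_left_px, x_right_px):
    """Range to the target from the horizontal disparity between the two images."""
    disparity = x_left_px - x_right_px
    if disparity <= 0:
        raise ValueError("disparity must be positive for a target in front of the cameras")
    return focal_px * baseline_m / disparity

def lateral_offset(focal_px, cx_px, x_left_px, depth_m):
    """Sideways offset of the target from the left camera's optical axis, in meters."""
    return (x_left_px - cx_px) * depth_m / focal_px

def clamp(value, limit=1.0):
    """Restrict a command to the range [-limit, limit]."""
    return max(-limit, min(limit, value))

def follow_command(depth_m, offset_m, standoff_m, k_range=0.5, k_yaw=0.8):
    """Proportional commands: close the range error and turn toward the target."""
    surge = clamp(k_range * (depth_m - standoff_m))        # forward if too far away
    yaw = clamp(k_yaw * math.atan2(offset_m, depth_m))     # turn toward the bearing
    return surge, yaw

# Hypothetical detection: target centered at x=700 px (left) and x=600 px (right).
depth = stereo_depth(focal_px=1000.0, baseline_m=0.2, x_left_px=700.0, x_right_px=600.0)
offset = lateral_offset(focal_px=1000.0, cx_px=640.0, x_left_px=700.0, depth_m=depth)
surge, yaw = follow_command(depth, offset, standoff_m=1.5)
print(f"range {depth:.2f} m, offset {offset:.2f} m, surge {surge:.2f}, yaw {yaw:.3f}")
```

Because the range estimate depends on a disparity of only a few pixels, small timing or processing differences between the two cameras translate directly into range error, which is one reason synchronized, unprocessed image acquisition matters for this kind of application.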

DeepSea Power & Light has introduced new cameras specifically targeting machine vision applications like those in development at MBARI. SmartSight subsea machine vision cameras employ mature industrial global shutter modules compliant with industry standards and offer a range of optics, from wide-angle to narrow fields of view, with resolutions from 0.4MP to 5.0MP in both color and monochrome formats. With this array of options built on the proven HD Multi SeaCam platform, the SmartSight MV100 line of cameras can accelerate machine vision projects and provide interoperability with scalability in resolution and sensitivity. This mix of features makes the SmartSight MV100 family well suited both for use with machine learning models and for collecting the high-quality images needed to create and improve training data sets.

Machine vision technologies are at a tipping point in the subsea industry, where the range of applications and uses is expanding rapidly. Understanding how machine vision cameras differ from conventional cameras, and how that difference affects the viability of a machine learning application, is important to a project's success. Ultimately, this new way of “seeing” opens up exciting new avenues for learning more about our deep-sea environments and may play a key role in subsea research for years to come.

To find out more about DeepSea’s product lines and applications, visit: https://www.deepsea.com/

This feature appeared in Environment, Coastal & Offshore (ECO) Magazine's 2023 Deep Dive III special edition, Deep-Sea Exploration.